I am looking at a visual status display board. It has all of the usual things: safety information, a 5S audit score, and quality metrics.

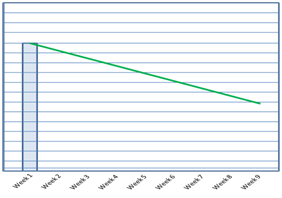

The quality metrics display is pretty typical – there is a target trend line heading down, and a provision for bars representing actual. There is only one bar right now – it looks like this:

Clearly there is a target for next week that is lower than the results from this week.

Equally clearly, there is a desire to reduce defects by about half over a couple of months.

This is an effective way to display results.

But results of what?

As a manager, when I look at this board, there is one critical thing that is missing:

What are we doing to impact the outcome? What problems are keeping them from reaching the target now?

What problem are they working on at the moment?

What do they understand about that problem?

What reduction do they anticipate achieving if that problem is solved?

In a “Problems First” culture, we want focus to be on what problems we are working on right now. The results follow.

Even if the bars were marching along, ever lower, under the trend line, I am not satisfied unless I can understand why. What problems are being solved? Are they being solved the right way? Because I know it is impossible to measure yourself into different performance, I have to seek out understanding of what, exactly, is impacting these numbers. To do otherwise is to neglect my responsibility to verify that the issues are being addressed in the right way.

I’m surprised there are no comments on this post. To me this describes the essence of lean thinking and the disconnect that often surrounds it. The essence of lean isn’t 5S, Heijunka, or any other tool. It’s the ability to identify problems, implement a countermeasure, check the results of that countermeasure, and then restart PDCA if you didn’t see the expected results. It’s not rocket science, but I’m always surprised at how often training and consultants skip right over that part. Is it just not as sexy as something like 5S?

As lean practitioners, it’s our job to teach and coach people to learn about their processes. Every action in a process should have a predictable outcome; if it doesn’t, you have more learning to do and problems to fix. I often hear of leaders touting performance improvements and showing graphs just like the one you displayed. When we really dig in, we find the improvements came from things like product mix, material cost, or a variety of other factors that had nothing to do with process improvement.

The idea that the entire point of “the tools” is to power the PDCA loop is only now starting to move beyond an abstract concept.

The “tools” are treated as “operations,” and “problem solving” is regarded as something you do as a separate activity.

Further, PDCA is taught as an abstract concept, and then people move on to “other topics” rather than making it the center of everything.

Just compare how you were taught in your own company’s “workshop leader certification” with the comments you are making here, and you can see the difference.